Total Recall? Or The Lawnmower Man? Find out in the latest podcast ep…

This week on VFX Firsts, I’m joined by Marta Svetek to discuss the first motion capture done for a film. Marta is an actor, motion capture performer and senior digital marketing and community manager at Maxon. She’s repped by The Mocap Agency.

As you’ll hear, one of the films we discuss certainly employed mocap in the shoot, but not in the final shots. Then, the other film we talk about leapt upon mocap for a particular FX solution. There’s a lot more detail in the show notes, below, plus a bonus interview with the original mocap team.

Listen to Marta and me on Apple Podcasts or Spotify, or directly in the embedded player here. Also, here’s the RSS feed.

Show notes

Some early short project/commercial breakthroughs

– ‘Brilliance’ (1985), from Robert Abel and Associates

– ‘Don’t Touch Me’ from Kleiser-Walczak: article at befores & afters

– Other breakthrough moments: The Stick Man (1967), Adam Powers, The Juggler (1981)

Total Recall (1990)

– Behind the scenes article at befores & afters

– Video featurette (see from 8min in)

The Lawnmower Man (1992)

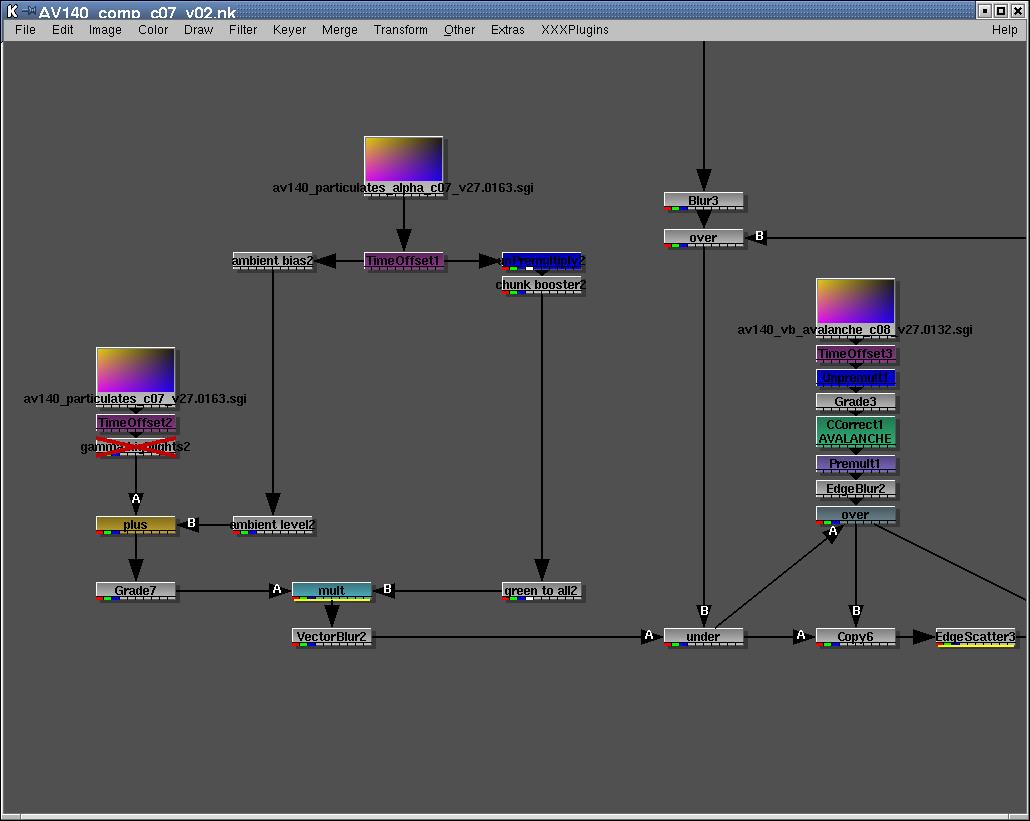

Here, Homer & Associates worked on a shot of the priest being set on fire. The studio used particle system techniques and a motion capture system brought over from Italy, which had been developed by Francesco Chiarini and Umberto Lazzari. The actor was sampled in a motion capture lab equipped with 4 infrared cameras. He wore Scotchlite markers, which the system tracked, and from that data the team could animate a CG figure and apply the particle software.

Homer & Associates later used the same motion capture approach for Peter Gabriel’s music video for Steam. I happened to interview Francesco and Umberto about Steam for a piece that was never published, which I now present here!

How did you get started in motion capture?

Francesco Chiarini: We had a production company, SuperFluo in Bologna, and in the mid 80s started to develop a complete CGI system, from modeling to rendering: back then there was no commercial software yet. While developing this system we found out via our friend Roberto Maiocchi that the Polytechnic was developing a real time image processor (called ELITE) that used an image correlation algorithm to extract the signature of retro-reflective markers, and that a startup company called BTS was developing applications in the bio-mechanical / medical field for this device. With SuperFluo, we joined forces to develop applications for the entertainment industry. After a few experimental mocap animations (shown at Siggraph 1990 in Dallas TX), a couple of years later, in summer 1990, the CG animated station break “Rai Ciao GO” for the World Soccer Championship “Italia ’90” was the very first commercial use of motion capture. It was broadcast worldwide and was our best credential to start working in Hollywood in 1991: The Lawnmower Man (1992), the first movie with mocap animation.

Can you talk about how SuperFluo worked? What components made up both the mocap gear worn by the performer, and the hardware and software?

Francesco Chiarini: The ELITE processor was streaming 2D co-ordinates (corresponding to the markers for each frame for each camera) to a PC for recording. The post processing on the PC included a calibration step to obtain the cameras’ 3D positions and orientations, then the labeling and tracking of the performer’s markers, followed by the 3D reconstruction by means of triangulation (photogrammetry). For body mocap an additional software layer converted the plain 3D coordinates into a hierarchical data structure defined by a “skeleton” with joints. For each joint, 2 or 3 angles of rotation (as needed) were computed. Each joint depended on the previous one (the wrist depending on the elbow, in turn depending on the shoulder, and so forth) all the way to a root point (say the belly button) that defined the CG character’s position in space.
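To make the hierarchy Francesco describes a little more concrete, here is a minimal sketch of a parent-child skeleton in Python. It is not SuperFluo’s software or data format: it is a planar simplification with one angle per segment (the real system computed 2 or 3 angles per joint), and the `Segment` class, the `end_position` function and the bone lengths are all hypothetical names and values chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Segment:
    """One bone in the hierarchy (hypothetical structure, for illustration)."""
    name: str
    parent: Optional["Segment"]  # None means the segment hangs off the root
    length: float                # bone length, arbitrary units
    angle_deg: float             # rotation relative to the parent segment


def end_position(segment: Segment,
                 root_xy: Tuple[float, float] = (0.0, 0.0)) -> Tuple[float, float]:
    """Return the world position of the far end of `segment`.

    Walk up to the root, then accumulate each relative rotation on the
    way back down, so every segment depends on the ones before it.
    """
    chain = []
    node: Optional[Segment] = segment
    while node is not None:
        chain.append(node)
        node = node.parent

    x, y = root_xy
    heading = 0.0
    for seg in reversed(chain):
        heading += math.radians(seg.angle_deg)
        x += seg.length * math.cos(heading)
        y += seg.length * math.sin(heading)
    return x, y


# Root (say the belly button) -> shoulder -> elbow -> wrist.
spine = Segment("spine", None, 0.5, 90.0)            # pointing straight up
upper_arm = Segment("upper_arm", spine, 0.3, -90.0)  # held out horizontally
forearm = Segment("forearm", upper_arm, 0.25, 0.0)   # elbow straight

wrist_xy = end_position(forearm)
```

Changing only `spine.angle_deg` moves the wrist as well, which is the point of a hierarchical format: the animator edits joint angles and the positions further down the chain follow.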

Back then we did not use a mocap suit. We attached the markers directly to the skin with hypoallergenic double sticky tape, as was done in the medical field. At the beginning we had the most basic system with only 2 cameras. This implied extreme limitations: the performer could not rotate much, or one or more markers would not be visible to both cameras at the same time, which was necessary for 3D triangulation. When we got a 4 camera system things got only slightly better, and we understood the hard way that a huge number of cameras was necessary: a few years later dozens of cameras were used for the job by the industry.
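The reason a marker has to be seen by two cameras at once can be sketched in a few lines. The example below is an illustration only, not the ELITE or SuperFluo algorithm: it assumes each calibrated camera has already turned its 2D marker observation into a 3D ray (a camera center plus a direction), and it uses the simple midpoint method, taking the point halfway between the two rays where they pass closest to each other. The function names `triangulate_midpoint` and `reconstruct` are hypothetical.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _scale(a, s):
    return tuple(x * s for x in a)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


def reconstruct(views):
    """`views` holds one (center, direction) ray per camera, or None when
    the marker is hidden from that camera. Fewer than two rays means there
    is no 3D position for this frame."""
    visible = [v for v in views if v is not None]
    if len(visible) < 2:
        return None
    (c1, d1), (c2, d2) = visible[0], visible[1]
    return triangulate_midpoint(c1, d1, c2, d2)


# Two cameras looking at a marker placed at (0, 1, 2).
cam_a = ((-1.0, 0.0, 0.0), (1.0, 1.0, 2.0))
cam_b = ((1.0, 0.0, 0.0), (-1.0, 1.0, 2.0))

marker = reconstruct([cam_a, cam_b])  # both cameras see the marker
lost = reconstruct([cam_a, None])     # performer turned away from camera B
```

With only 2 cameras, any occlusion from either view drops the marker for that frame, which is why adding cameras helps: more views mean more chances that at least two rays are available.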

Umberto Lazzari: We also had tools for filtering noise and for filling occlusion gaps. In addition to the hierarchical format we could export the data to global 3D positions or interpolation values (for driving facial blend shapes).
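Umberto doesn’t say which methods those tools used, so the following is only a sketch of the general idea, assuming the simplest possible choices: linear interpolation to bridge a gap where a marker was occluded, and a moving average to smooth noise. It works on one coordinate of one marker over time, and `fill_gaps` and `moving_average` are hypothetical names.

```python
def fill_gaps(samples):
    """Linearly interpolate runs of None that sit between two known samples.

    Gaps at the very start or end of the take are left as None, since
    there is nothing on one side to interpolate from.
    """
    filled = list(samples)
    known = [i for i, value in enumerate(filled) if value is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            t = (i - left) / span
            filled[i] = filled[left] + t * (filled[right] - filled[left])
    return filled


def moving_average(samples, radius=1):
    """Smooth a gap-free track with a centered window of up to 2*radius+1 samples."""
    smoothed = []
    for i in range(len(samples)):
        window = samples[max(0, i - radius): i + radius + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


# One coordinate of one marker; None marks frames where it was occluded.
track = [0.0, 1.0, None, None, 4.0]
repaired = fill_gaps(track)
smoothed = moving_average(repaired, radius=1)
```

Straight-line filling is only plausible for short gaps. A long occlusion during a fast move loses the real motion, which fits with the team breaking sequences into short segments and discarding takes with too much data loss.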

What are your memories of the motion capture sessions for Peter Gabriel’s Steam?

Francesco Chiarini: We recorded motion capture for 2 days. The shooting planning was minimal and the director, Stephen Johnson, was having new ideas all the time. We ended up with almost 200 takes, but many of them were not usable because of data loss due to the system’s limitations.

My main memory is about dealing physically with Peter Gabriel as I was the one sticking markers on his bare skin. He was extremely friendly and easy, and when I warned him that I was going to hurt him when removing the markers, as body hairs would be pulled out with the double sticky tape, he smiled at me and replied: “pain is not always a bad thing…”

Peter Gabriel seemed very happy in trying the motion capture technology and enthusiastic about CG. He was very nice and humble: every time sitting with us and the rest of the crew on the stage’s floor eating take out food: not exactly your standard Rock Star!

We delivered the data in the hierarchical data structure mentioned above, and set up a corresponding CG character rig in the animation environment of choice, as Umberto can further explain.

Umberto Lazzari: Both Peter Gabriel and the African dancer were simply amazing. There was a special energy during the session as we knew that we were pioneering a new technology with lots of potential but still many limitations. Also Peter asked that there be no difference between performers and crew so everybody had to dance to the music while we were capturing them.

For both scenes we had to capture Peter and the dancer separately. We also had to break the sequences into short segments so we could get manageable and accurate data.

The captured data was provided to Peter Conn in the hierarchical format explained by Francesco. To Brad deGraf we delivered global 3D positions to use with Softimage inverse kinematics.

What were the challenges, back then, of generating realistic and usable mocap data?

Francesco Chiarini: Motion capture had a bad reputation and we had to fight against this when we got to California. All the passive optical motion capture technologies back then (BTS, VICON, Motion Analysis) were developed for medical research (not even as a diagnostic tool in hospitals) without facing the extremely complex needs of the entertainment industry.

Aside from needing more cameras to capture more complicated performances (as I mentioned before), we at SuperFluo were the only ones able to provide a robust and reliable service. SuperFluo also had its own custom CGI software system, so we were knowledgeable about all the nuances of the CG pipeline: this made us even more unique.

Umberto Lazzari: We had to overcome the many technical limitations of a system conceived mainly for medical gait analysis. When we did the project our 4-camera system operated as two 2-camera systems in parallel and we had to split the volume into two halves: front and back, or right and left sides.

For the right and left sides setup Francesco built special rigs (antennas) with markers for capturing the spine movement. Other limitations were the number of frames that we could capture in one take and the size of the volume that we could cover with 4 cameras to get manageable and accurate data.

So there you have it, thanks again for listening to VFX Firsts!